Last week, I had the pleasure of attending the ISPIM Connects Yokohama innovation conference. I presented a conference paper on a research project titled “AI vs. Human Teams in the Development Stage of X-IDEA: A Comparative Study of Idea Concept Quality”, co-authored with my colleague Dr. Ronald Vatananan-Thesenvitz of Bangkok University’s Institute for Knowledge and Innovation, Southeast Asia (IKI-SEA). Our paper reported striking results from two experiments I conducted over the past year. I wanted to find out how effective AI is within the structured workflow of a sophisticated innovation method, especially in what I consider its most crucial phase: mid-stage concept development. I was quietly wondering:

Can AI really help where it matters most—not just with exploring and framing an innovation challenge or generating ideas, but with designing strong, meaningful concept ideas that are ready for evaluation and action?

Spoiler alert: with the right method, the answer is yes—and the implications for time, cost, and throughput are huge.

The setup of our research: Two experiments

In Q4 2024, I facilitated a real-life innovation project with a Thai tech scaleup using X-IDEA, Thinkergy’s five-stage innovation method. Two student teams worked through the project stage by stage under my guidance. In parallel, I secretly ran an AI “team” (GPT-4) through the same stages, using prompts derived from the specific X-IDEA tools and stage logic. Later, I anonymized the resulting concepts and asked two review panels, innovation experts and second-year MBA-i students (“innovation practitioners”), to rate each concept on five criteria: Originality, Meaningfulness (Value), User Relevance (Empathy), Elaboration, and Overall Quality. The reviewers also guessed whether each concept had been written by humans or by AI. Finally, my colleague Dr. Ronald statistically analyzed the data to test the research questions we had formulated.

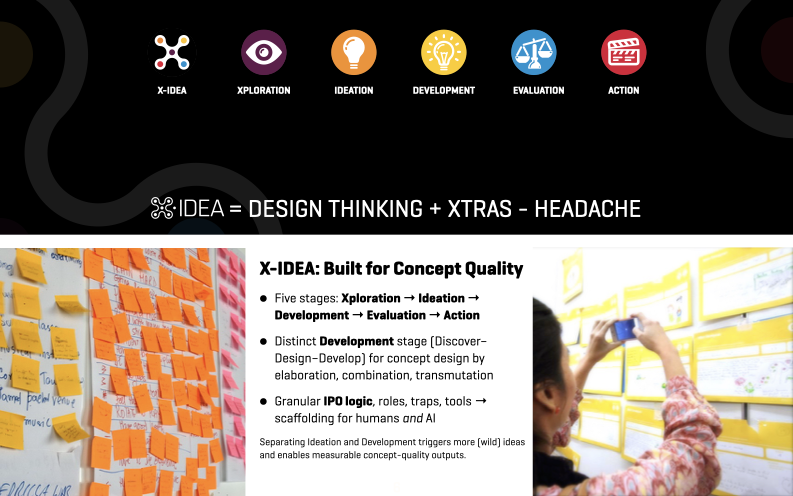

Why X-IDEA?

Of course, I believe it's the most effective innovation method available (and, as its creator, I am naturally biased). However, the main reason X-IDEA qualifies for these experiments is that it separates the Ideation stage (hundreds of short, wild ideas) from the Development stage (fewer, deeper concepts). That distinction is rare, yet crucial. Our study focused squarely on Development, where the raw quantity of ideas from Ideation is transformed into high-quality idea concepts.

What surprised us (and got the room buzzing)

- The winners’ circle skewed toward AI. Human teams and AI scored close to each other on the four sub-criteria, and on average, the human teams’ concepts received slightly higher Overall Quality ratings. But when you look at the very best concepts, the ones you’d actually take forward to a pitch, AI dominated the Top-5 and Top-10 lists. In our case, ~70–80% of those “top tickets” were AI-generated. That’s a big deal. In real projects, leaders don’t remember the mean; they pick the few concepts that move the needle.

- Evaluators struggled to tell who wrote what. In a blind review, raters’ accuracy in guessing a concept’s origin was only slightly above the 50% expected by chance: about 4 in 10 guesses were wrong. And there was a fascinating bias: the more highly a concept was rated, the more likely reviewers were to assume it was human-made. In other words, quality became a proxy for “this must be human.” It’s a small reminder of how our mental shortcuts can blur reality.

- Experience helped, a little. More work experience nudged origin-guess accuracy upward, though the effect was modest. Demographics (cohort, gender, etc.) didn’t matter much. Calibration and criteria seem to matter more than who you are.
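For readers who want to run similar checks on their own reviews, the two headline analyses above can be sketched in a few lines of Python. This is a simplified illustration, not the statistical analysis in our paper: it assumes each concept is a hypothetical `(origin, score)` pair holding its true origin and mean Overall Quality rating, and the ratings and guess counts below are invented for illustration. It contrasts group means with the AI share of the top-k concepts, and uses an exact one-sided binomial test to ask whether an origin-guess hit rate beats the 50% coin-flip baseline.

```python
from math import comb
from typing import List, Tuple

# Hypothetical record format: (origin, mean Overall Quality score across raters).
Concept = Tuple[str, float]


def top_k_ai_share(concepts: List[Concept], k: int) -> float:
    """Share of AI-generated concepts among the k highest-rated concepts."""
    top = sorted(concepts, key=lambda c: c[1], reverse=True)[:k]
    return sum(1 for origin, _ in top if origin == "AI") / len(top)


def mean_score(concepts: List[Concept], origin: str) -> float:
    """Average score of all concepts from one origin ("AI" or "human")."""
    scores = [score for o, score in concepts if o == origin]
    return sum(scores) / len(scores)


def binomial_p_value(correct: int, total: int, p: float = 0.5) -> float:
    """Exact one-sided P(X >= correct) if every guess were a coin flip with success probability p."""
    return sum(comb(total, i) * p**i * (1 - p) ** (total - i) for i in range(correct, total + 1))


# Toy ratings, invented for illustration only (not the study's data).
ratings: List[Concept] = [
    ("AI", 9.0), ("AI", 8.8), ("AI", 8.5), ("AI", 8.2), ("AI", 4.0), ("AI", 3.5), ("AI", 3.0),
    ("human", 8.6), ("human", 7.0), ("human", 6.8), ("human", 6.7), ("human", 6.5), ("human", 6.4),
]

print(mean_score(ratings, "human") > mean_score(ratings, "AI"))  # True: humans lead on average
print(top_k_ai_share(ratings, 5))  # 0.8: yet 4 of the top 5 are AI

# Hypothetical guess counts: the same 60% hit rate at two different sample sizes.
print(binomial_p_value(12, 20))   # 12 of 20 guesses correct
print(binomial_p_value(60, 100))  # 60 of 100 guesses correct
```

In this toy data, the human concepts have the higher mean while AI still holds 4 of the top 5 slots, mirroring the mean-versus-top pattern described above. The two binomial calls show why “slightly above chance” always needs a sample size: the same 60% hit rate gives p ≈ 0.25 with 20 guesses but p ≈ 0.03 with 100.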

The productivity kicker leaders care about

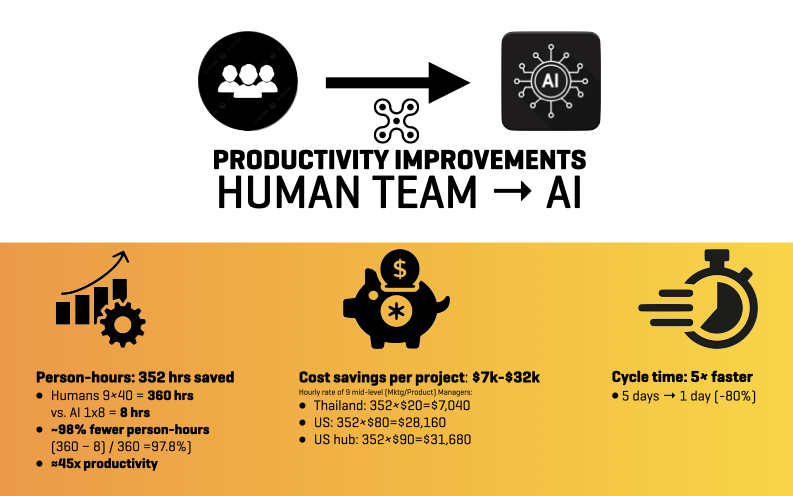

Concept quality is one thing. Process throughput is another, and it makes a real business case:

- The two human teams invested approximately 360 person-hours in the entire creative process to develop their set of concepts, evaluate them, and finally pitch their top concepts.

- The AI workflow (one operator using GPT-4) completed the same end-to-end process in approximately 8 hours.

That’s roughly 352 person-hours saved, about 45x higher productivity per person-hour (360 ÷ 8), and a 5x faster cycle time (a week of human work compressed into a day). Depending on salary benchmarks and location, that translates into HR operating-cost savings of roughly USD 7k–35k per project and team, without sacrificing the quality that actually matters to innovation decision-makers.
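The headline figures follow directly from the two effort totals. Here is a minimal sketch of that arithmetic. The hourly cost range of USD 20–100 is an assumption, back-calculated so that the saved hours reproduce the stated USD 7k–35k range; it is not a figure reported in the study, and the function names are illustrative.

```python
from typing import Dict, Tuple

# Figures taken from the article.
HUMAN_PERSON_HOURS = 360  # two human teams, full creative process
AI_PERSON_HOURS = 8       # one operator running the GPT-4 workflow
HUMAN_CYCLE_DAYS = 5      # "a week of human work", read as five workdays
AI_CYCLE_DAYS = 1         # "compressed into a day"

# Assumption (not from the study): loaded HR cost per person-hour in USD,
# back-calculated so that the saved hours land on the stated USD 7k-35k range.
HOURLY_COST_RANGE_USD = (20, 100)


def productivity_summary(human_h: float, ai_h: float, human_days: float, ai_days: float) -> Dict[str, float]:
    """Derive the headline effort and cycle-time figures from the raw totals."""
    saved = human_h - ai_h
    return {
        "hours_saved": saved,
        "effort_reduction_pct": 100 * saved / human_h,
        "productivity_multiple": human_h / ai_h,
        "cycle_time_multiple": human_days / ai_days,
    }


def cost_savings_range(hours_saved: float, rate_range: Tuple[float, float]) -> Tuple[float, float]:
    """Convert saved person-hours into a low/high USD savings estimate."""
    low_rate, high_rate = rate_range
    return hours_saved * low_rate, hours_saved * high_rate


summary = productivity_summary(HUMAN_PERSON_HOURS, AI_PERSON_HOURS, HUMAN_CYCLE_DAYS, AI_CYCLE_DAYS)
print(f"Saved {summary['hours_saved']} person-hours ({summary['effort_reduction_pct']:.0f}% less effort)")
print(f"Productivity: {summary['productivity_multiple']:.0f}x, cycle time: {summary['cycle_time_multiple']:.0f}x faster")
print(cost_savings_range(summary["hours_saved"], HOURLY_COST_RANGE_USD))  # (7040, 35200)
```

Note that 45x compares effort (person-hours), while 5x compares elapsed time; the two differ because the human effort was spread across several team members working in parallel.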

Several senior academics and seasoned practitioners congratulated me enthusiastically after my presentation, noting that this was the first time they had seen a clean, stage-specific test of AI that went beyond Ideation or tool-specific applications. The finding that AI can consistently populate the top set of concepts, and do so 45× more productively per person-hour, has attracted considerable attention.

Why the innovation method matters (a lot)

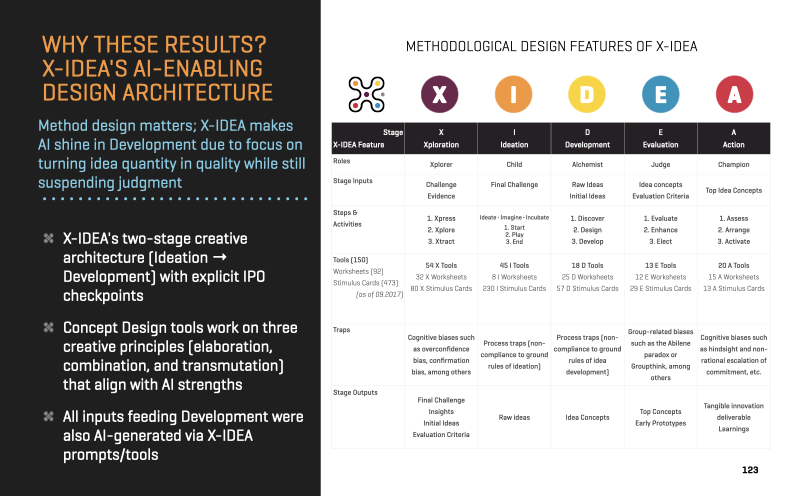

Our stunning results weren’t magic-model dust. They came from the way X-IDEA structured and guided the AI:

- Two creative stages with different goals (hundreds of raw ideas in Ideation → two dozen richer concepts in Development).

- Stage-specific roles, steps, and checkpoints (so AI knows what to do when).

- Clear inputs–throughputs–outputs and trap/bias awareness (so the work flows rather than loops).

AI thrives when you give it structured briefs and bounded tasks. X-IDEA’s Development stage asks for exactly that: organizing, elaborating, combining, and transmuting intriguing ideas into realistic, value-adding concepts. It’s the pivotal stage to transform messy madness (Ideation) into meaningful magic (Development).

AI proved remarkably consistent and efficient at building strong first drafts of concepts worth evaluating. Surprisingly, it was essentially on par with the human teams even in areas considered typical human strengths, such as empathy and contextual judgment. This makes human-AI complementarity something you can engineer through process design.

What this means for innovation managers

- Treat AI as a separate team in innovation projects. Consider establishing an “AI team” to work on every major innovation project you tackle alongside one or more human innovation teams.

- Adopt a sophisticated innovation method with a dual-stage creative process flow. If your current method doesn’t separate Ideation from Development, you’re leaving performance and clarity on the table.

- Upgrade your review process. Because a concept’s origin is hard to spot, use clear criteria and blind assessments to reduce bias and pick the truly best concepts.
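A blind review needs very little tooling. Below is a minimal sketch, not necessarily how our study handled it: it assumes concepts arrive as dictionaries with hypothetical `title`, `text`, and `origin` fields, strips the origin, shuffles the order, assigns neutral codes, and keeps a separate key for unblinding once all ratings are in.

```python
import random
from typing import Dict, List, Tuple


def blind_concepts(
    concepts: List[Dict[str, str]], seed: int = 0
) -> Tuple[List[Dict[str, str]], Dict[str, str]]:
    """Return (reviewer_sheet, unblinding_key).

    The sheet drops the origin field and relabels concepts with neutral codes.
    Codes are assigned after shuffling, so their order reveals nothing about origin.
    The key maps each code back to its origin and stays with the facilitator.
    """
    rng = random.Random(seed)
    shuffled = list(concepts)
    rng.shuffle(shuffled)
    sheet: List[Dict[str, str]] = []
    key: Dict[str, str] = {}
    for i, concept in enumerate(shuffled, start=1):
        code = f"C{i:02d}"
        key[code] = concept["origin"]
        sheet.append({"code": code, "title": concept["title"], "text": concept["text"]})
    return sheet, key


def unblind(scores: Dict[str, float], key: Dict[str, str]) -> Dict[str, List[float]]:
    """After rating is complete, group each code's score under its true origin."""
    by_origin: Dict[str, List[float]] = {}
    for code, score in scores.items():
        by_origin.setdefault(key[code], []).append(score)
    return by_origin


# Hypothetical example concepts.
concepts = [
    {"title": "Concept A", "text": "Short concept description", "origin": "AI"},
    {"title": "Concept B", "text": "Short concept description", "origin": "human"},
    {"title": "Concept C", "text": "Short concept description", "origin": "AI"},
]
sheet, key = blind_concepts(concepts, seed=42)
for row in sheet:
    print(row["code"], row["title"])  # reviewers see codes and titles only
```

Keeping the key separate from the reviewer sheet is the design choice that matters: reviewers rate against the criteria alone, and origin is only rejoined to the scores at analysis time.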

A note on fairness and scope

Every research study has its limits. The limitations we flagged include:

- Context: one real innovation project, one industry domain, one cohort of reviewers (split into innovation experts and practitioners).

- AI model: GPT-4 at the time of concept design. Newer models are 4–8x stronger; we expect the direction of the effects to hold and likely to strengthen.

- Method matters: our study used X-IDEA, which is granular and stage-smart. Swap in a looser method, and your results may vary.

Why our results matter beyond one study

In the first experiment (the innovation project), the AI “lane” was AI from end to end. All Development-stage concepts were built on inputs that AI itself had produced in earlier X-IDEA stages (Xploration, Ideation, Idea Discovery). No human ideas were fed into that stream; my role was only to operate the system and issue prompts based on the X-IDEA stage logic and tool instructions. Downstream, AI also supported Evaluation (selection/prototyping) and Action (pitch drafting). In short, the AI concepts were generated by AI from AI-generated inputs, without human content assistance, and still reached the top of the pile.

Our data show that you can relieve the mid-stage bottleneck without sacrificing quality: AI, guided by a well-structured innovation method, produced most of the top-rated concepts while cutting person-hours by 98% and making the cycle five times faster. If you’re testing AI in innovation, know that with the right method, it also shines in Development.

Conclusion: With the right innovation method, AI shines alongside human innovators

If you love creativity, this isn’t about humans vs. machines. It’s about designing the work so that each does what it does best. Structure doesn’t kill creativity; it channels it. And when you give AI a structure that fits, it can help your people work faster, more cheaply, and, most importantly, better.

If you want the academic nuts and bolts, our paper has all the details. If you want the practical punchline: with the right innovation method, AI doesn’t just keep up in mid-stage concept work—it often leads, faster and cheaper. And that’s exactly where many innovation pipelines bog down.

- If you’d like a deeper dive, the conference paper has the technical details and may also give you insights into how X-IDEA + AI could look in your team and context. Just contact me, and I'll send you the paper.

- Want to find out more about how X-IDEA actually works? See Chapter 4 of my Unleashing Wow! book. Alternatively, explore the X-IDEA booklet and visit the X-IDEA website to learn how X-IDEA is designed and how it improves upon other innovation methods.

- Curious how this could look in your innovation team—with your innovation challenges? Contact us to discuss the innovation project you want to work on with your innovation team—and, if you like, our “X-IDEA AI team”.

© Dr. Detlef Reis 2025.